Photo Contest 2023

Please vote by December 6, 2023, for your favorite research photo.

The image captures our research team beside a SARIT Electric Vehicle during a break from night-time field tests. Outfitted in safety gear, we epitomize the collective endeavor that powers our project. Our research integrates AI vision into SARIT EVs, aiming to bolster road safety and urban mobility. The vehicle in the photo is fitted with the advanced camera systems under evaluation. This hands-on approach allows us to refine algorithms and system performance through rigorous testing.

Concrete outcomes include faster response to traffic conditions and adaptability to Canada’s challenging climate. The image embodies both the human commitment and the technological innovation driving our research forward.

This photo captures a late-night hustle in our workshop, where we’re engrossed in tweaking our SARIT vehicle. It’s more than a garage; it’s where tech meets tenacity to make cities safer. Our research focuses on integrating AI vision into SARIT EVs, aiming to safeguard everyone on the road. This aligns with the UN’s Sustainable Development Goal 11: safer, sustainable cities.

The image is a visual narrative of the hard work and planning behind our innovations. It shows that change isn’t just about technology; it’s about the people driving it.

Practically, we’re working to:

• Reduce low-speed accidents through smart obstacle detection.

• Increase vehicle responsiveness to sudden changes, like a pedestrian crossing.

• Ensure affordability and resilience against Canada’s diverse climate.

This photo is a snapshot of the dedication that’s turning our vision into reality.

Captured under York University’s iconic light rings, our research team takes a moment after night-time tests of SARIT Electric Vehicles (EVs). This image isn’t just aesthetic; it reflects our mission to revolutionize urban transport through AI and cutting-edge camera technology integrated into SARIT EVs. These aren’t just buzzwords; they have real-world impact. Our AI vision system operates in real time and, crucially, adapts to low-light conditions, a key time for road incidents.

Our work dovetails with the UN’s Sustainable Development Goal (SDG) 11: safer, sustainable urban spaces. The tech we’re developing aims to minimize road accidents and improve emergency response, initially on campus, with broader urban expansion in sight.

The photo is more than pixels and light; it’s the human element in our tech journey, symbolizing that ultimate success lies in collaborative innovation.

Discover QuantimB, where science and art converge in our quest to advance brain tumor research. Our photo showcases our dedication.

The glowing brain above the desk captures attention, symbolizing our commitment to neuroscience. Photoshop enhances the impact, highlighting our mission’s significance.

Central to the image, the brain-shaped mousepad features three labeled segments, representing our core work: brain tumor segmentation. Each color-coded label signifies different research aspects, from tumor detection to classification and treatment planning. It mirrors the intricate puzzle we aim to solve. Through image analysis and advanced algorithms, we strive for better brain tumor understanding, driving improved diagnoses and treatments.
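Segmentation results like the three labeled regions described above are commonly scored with the Dice similarity coefficient, computed separately for each label. The sketch below is illustrative only: it assumes integer label masks with 0 for background and 1 to 3 for the three segments (a hypothetical encoding), and it is not QuantimB’s own evaluation code.

```python
import numpy as np

def dice_per_label(pred: np.ndarray, truth: np.ndarray, labels=(1, 2, 3)) -> dict:
    """Dice coefficient 2|A∩B| / (|A| + |B|) for each label in two integer masks."""
    if pred.shape != truth.shape:
        raise ValueError("masks must have the same shape")
    scores = {}
    for label in labels:
        a = pred == label
        b = truth == label
        denom = int(a.sum() + b.sum())
        # A label absent from both masks counts as perfect agreement.
        scores[label] = 1.0 if denom == 0 else float(2.0 * np.logical_and(a, b).sum() / denom)
    return scores

# Hypothetical 3x3 masks: 0 = background, 1-3 = the three segment labels.
truth = np.array([[0, 1, 1], [2, 2, 0], [3, 0, 0]])
pred = np.array([[0, 1, 0], [2, 2, 0], [3, 3, 0]])
print(dice_per_label(pred, truth))
```

A score of 1.0 means the predicted region matches the reference exactly for that label; scoring each label separately keeps a good result on one region from hiding a poor result on another.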

The image captures the parallel robot writing out the word “LASSONDE”.

This research aims to design a parallel robot (i.e., a robot with two or more arms) at the mesoscale (millimeter to centimeter scale). To accommodate this scale, the typical screws in a robot are replaced with flexible bodies, and the motors are replaced with piezoelectric benders (small-scale actuators). This image demonstrates the robot’s ability to move and carry out specific motions.

Applications include micro-scale manufacturing, micro-surgery, and scanning microscopy.

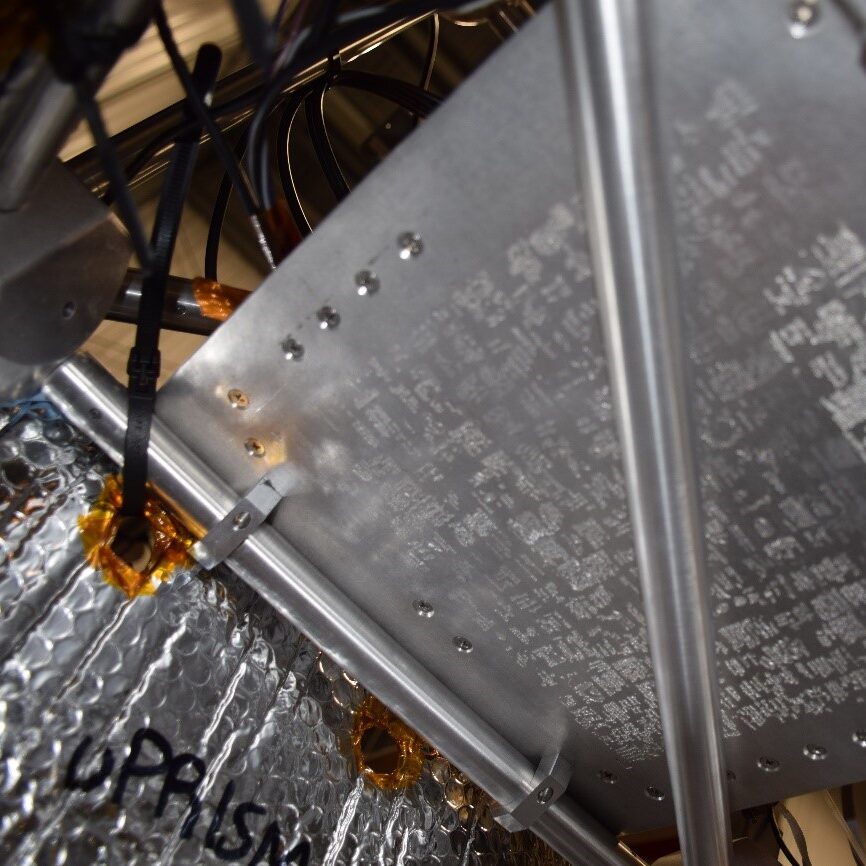

RSONAR II, a 4U CubeSat, is the result of a collaboration between York University’s Dr. Regina Lee (ESS) and Dr. Joel Ong (AMPD), with a mission to advance space sustainability through “Space Situational Awareness and Us.” This initiative features artistic exhibitions based on Professor Lee’s research, exploring the space environment, orbiting objects, and satellite-based space surveillance. During March Break, “Satellites & You” captivated Ontario Science Centre visitors, encouraging youth to compose messages to be carried to near-space aboard Dr. Lee’s nanosatellite. The image displays the engraved interface plate, which integrated these messages into the Canadian Space Agency’s system, sending them to the edge of space during the satellite’s mission. The interface plate is now part of The Life Cycle of Celestial Objects Pts. 1 & 2 exhibit.

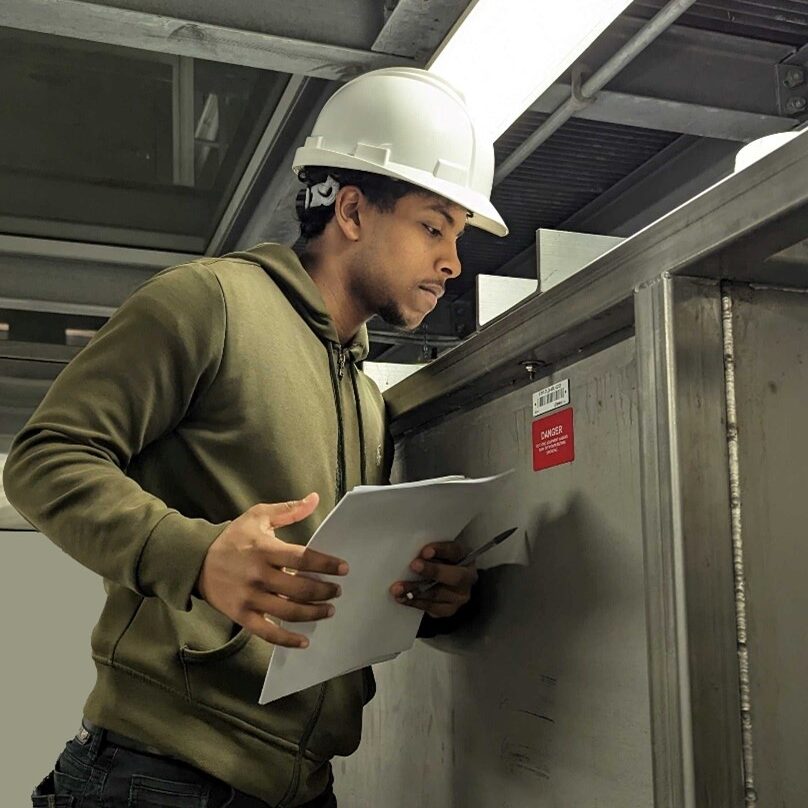

This image shows me looking into a tank and recording data at the Toronto Water R.C. Harris Water Treatment Plant. My supervisor and I were fortunate to be granted a tour of the pilot plant on site. A pilot plant is a small-scale replica of a full-scale plant, used to test and simulate a system or technology. The results can be used to design and optimize full-scale plants. This project was a collaboration with the Ajax Water Treatment Plant team in the Durham Region, which is looking to rejuvenate its on-site pilot plant. Our team was tasked with assisting on this project. By touring the Toronto Water pilot plant, we learned how it was originally designed and operated so that we could potentially apply our findings to the Durham Region pilot plant.

The image vividly depicts a dense forest, but instead of traditional trees, the forest consists of towering scrolls, each representing a privacy policy. These scrolls appear vast and potentially overwhelming. At the entrance of this metaphorical forest stands a user, an ordinary person, with a magnifying glass in hand. The magnifying glass signifies their effort to understand and make sense of the massive amount of information before them.

Our research aims to simplify app privacy policies, making them more accessible and comprehensible to the general public.

Applications:

By creating concise and clear privacy policies, app developers can enhance trust and user satisfaction, leading to better user retention. Our research may also be used to analyze trends in different industries to see which companies are more likely to have unethical information handling practices.

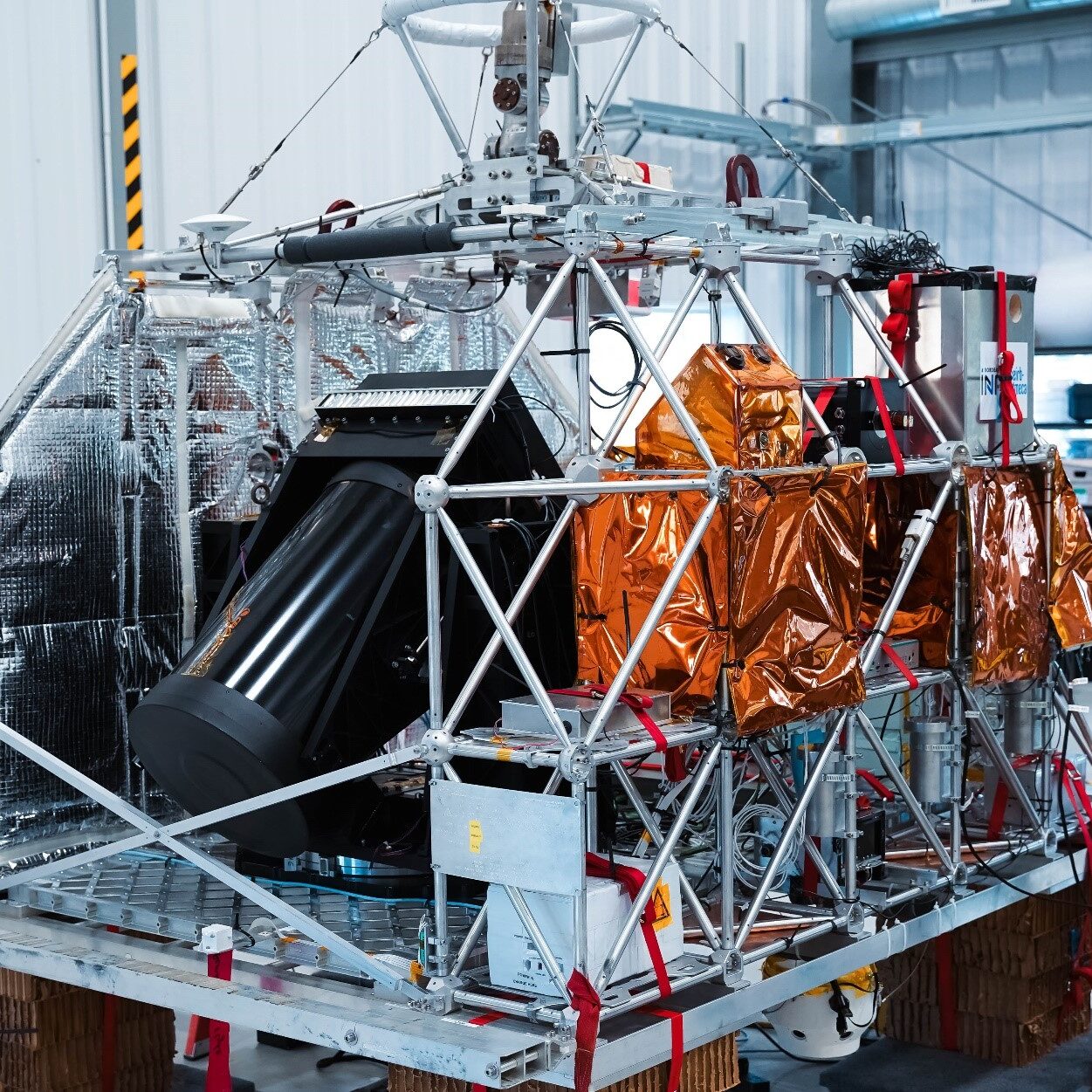

The image shows a stratospheric balloon carrying scientific research experiments shortly before launch, around sunset. It is the smaller of the two balloons used for the launch; its purpose is to stabilize the gondola that carries our experiment, and it deflates after launch. Our team was in the field at the stratospheric balloon base in Timmins, Ontario, working on our payload, which was launched on a similar balloon. We built a CubeSat that captured images of Resident Space Objects (RSOs), such as satellites, rocket bodies, and debris, from the stratosphere. The balloon rose to about 36 km, carrying our experiment with it. The mission was a success, and our payload was successfully recovered.

Our research focuses on Space Situational Awareness, which aims to identify and track space objects orbiting Earth. As a technology demonstration for future satellite missions, a payload containing multiple cameras and sensors was flown on board a stratospheric balloon. In this image, the payload in the orange film (front, top shelf) is integrated and ready to fly 40 km into the stratosphere to take images of the night sky. Our goal is to detect satellites moving across the stars in real time and eventually identify which object we are looking at. In addition, with the hope of inspiring the next generation, over 2000 signed messages from children were collected during a presentation at the Ontario Science Centre and etched onto the payload.

Our research focuses on Space Situational Awareness, which aims to identify and track space objects orbiting Earth. As a technology demonstration for future satellite missions, a payload containing multiple cameras and sensors was flown on board a stratospheric balloon. In this image, the balloon is preparing for its 40 km climb through the atmosphere, where it will remain afloat throughout the night. During this time, our cameras capture images of satellites passing across the stars. The goal is then to detect these objects in real time and eventually identify which object we are looking at. In addition, one dedicated camera was flown to take images of the sunset and sunrise, as shown here. Finally, with the hope of inspiring the next generation, over 2000 signed messages from children were collected during a presentation at the Ontario Science Centre and etched onto the payload.
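The idea of picking out satellites as objects that move relative to the background stars can be illustrated with simple frame differencing: pixels that brighten strongly between two frames mark where something has newly appeared. This is a minimal sketch under strong assumptions (the frames are already aligned so that stationary stars cancel out, and the threshold is hand-picked); it is not the team’s real-time detection pipeline.

```python
import numpy as np

def moving_candidates(prev_frame, curr_frame, threshold=0.5):
    """Return (row, col) pixels that brightened by more than `threshold` between frames.

    Assumes both frames are aligned so that stationary stars cancel out.
    """
    diff = curr_frame.astype(float) - prev_frame.astype(float)
    rows, cols = np.nonzero(diff > threshold)
    return list(zip(rows.tolist(), cols.tolist()))

# Toy 5x5 sky: one star stays put while a "satellite" moves one pixel to the right.
frame1 = np.zeros((5, 5))
frame1[1, 1] = 1.0
frame1[3, 1] = 1.0
frame2 = np.zeros((5, 5))
frame2[1, 1] = 1.0
frame2[3, 2] = 1.0
print(moving_candidates(frame1, frame2))  # prints [(3, 2)]
```

The star at (1, 1) cancels out, while the satellite’s new position survives the threshold. A real system would also need to register frames against gondola motion and link detections across many frames into tracks.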

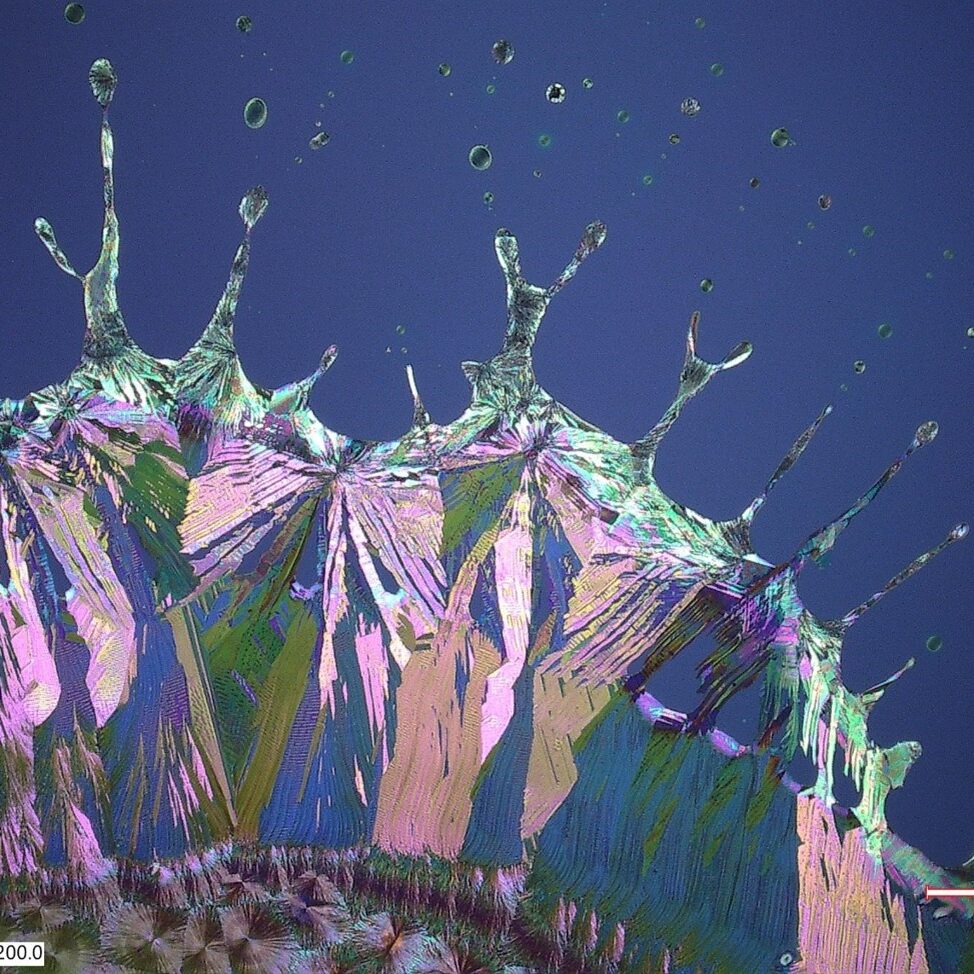

The image shows the edge of a sample. The sample consists of silicon wafer substrates blade-coated with a blend of the polymer polystyrene and the organic semiconductor TIPS-pentacene. The different colours in the image arise because the crystals that form rotate the polarized light from the microscope, giving this very abstract image. The purpose of this research is to optimize the manufacturing process for organic thin-film transistors (OTFTs). This image reflects the surface energy of the silicon wafer after our surface treatments, which essentially determines how well the ink sticks to the wafer. Ideally, the ink would cover the silicon wafer completely, but that is not what we see here: the film ends before the edge of the silicon wafer, i.e., the surface energy is not high enough to attract and hold the ink.
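The link between surface energy and how well the ink wets the wafer is commonly expressed by Young’s equation for a liquid drop resting on a solid. It is included here as general background, not as the authors’ analysis; γ_SV, γ_SL and γ_LV are the solid/vapour, solid/liquid and liquid/vapour interfacial energies, and θ is the contact angle of the ink on the wafer.

```latex
\gamma_{SV} = \gamma_{SL} + \gamma_{LV}\cos\theta
\quad\Longrightarrow\quad
\cos\theta = \frac{\gamma_{SV} - \gamma_{SL}}{\gamma_{LV}}
```

A higher solid surface energy γ_SV increases cos θ, so the contact angle shrinks and the ink spreads over more of the wafer; a low surface energy gives a larger contact angle and poorer wetting, which is consistent with a film that stops short of the wafer edge.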

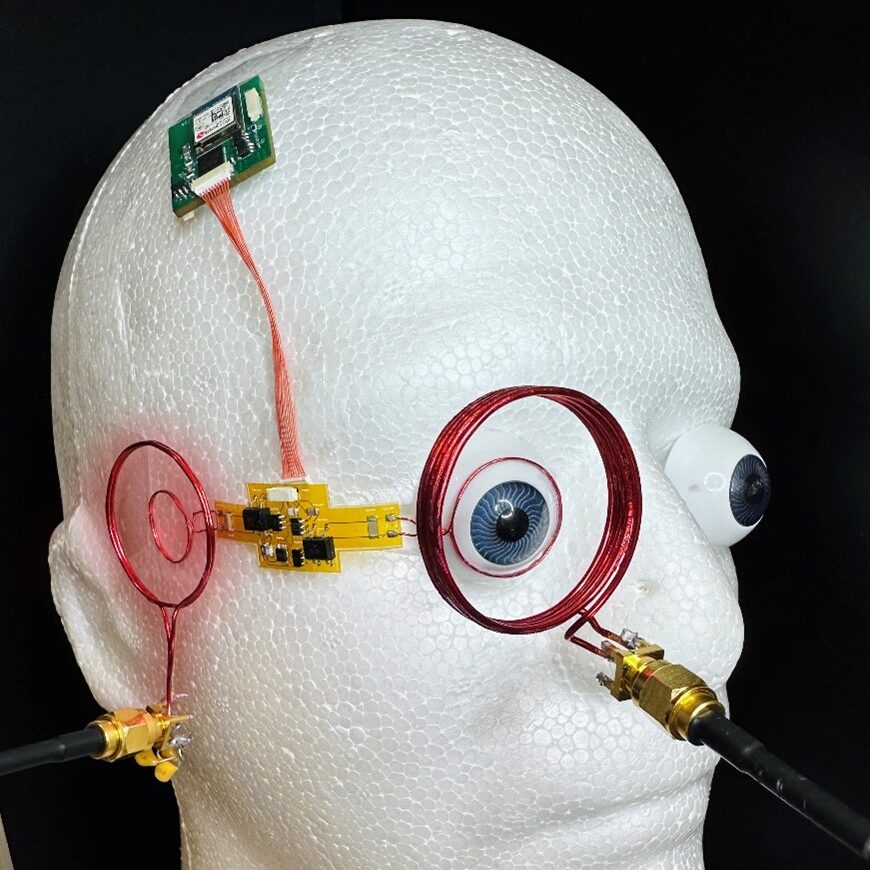

Revolutionizing Sight: Wireless Retinal Implant for Overcoming Degenerative Vision Loss

The research presented is part of an NSERC (Natural Sciences and Engineering Research Council of Canada) grant to develop retinal implants for overcoming degenerative vision loss. The proposed system is designed to be easily implantable and wirelessly rechargeable, providing an efficient, low-cost alternative to clinical treatments.

A power coil and a data coil connected by a flexible printed circuit board, together with a retinal implant on top of the head, show the system ready for implantation.

Retinal degeneration causes people to lose vision with age. The photoreceptors inside the eye degenerate and weaken over time, so the chain of visual signals weakens. The proposed retinal implant regenerates the spikes that stimulate the neurons carrying visual signals to the brain, bridging the gap and helping affected people restore their sense of vision.

Well-known related research includes the Boston Retinal Prosthesis, Argus II, and work at MIT.

The image captures a charming scene of the SARIT Electric Vehicle, a compact and visually appealing automobile. SARIT is parked against a backdrop of lush greenery and clear blue skies, symbolizing its harmony with nature. Its sleek and cute design is evident, showcasing its modern yet friendly aesthetics. The absence of a traditional exhaust pipe emphasizes its eco-friendly nature.

Our research centers on advancing sustainable transportation solutions for the modern world. The image of the SARIT Electric Vehicle holds a pivotal role in our investigation. We’re exploring how electric vehicles like SARIT can revolutionize urban mobility while addressing environmental concerns, and which technologies we can add to this vehicle to make it more efficient.

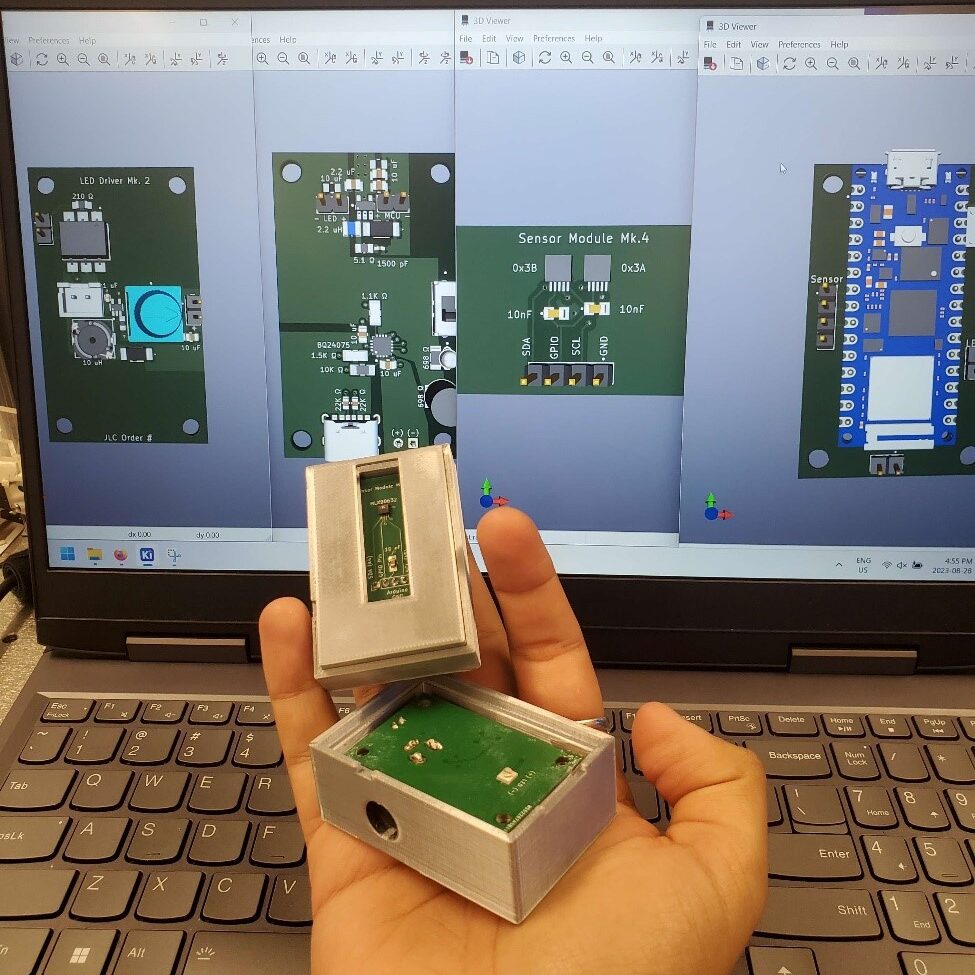

Physical Prototype of Low-Cost, Rapid, LFA Sensor Platform + Future PCB Designs!

This is the first physical prototype of a low-cost, rapid, and portable Arduino-based sensor platform. It uses Lateral Flow Assays (LFAs), a cheap paper-based testing method, to quickly detect a target substance and quantify its concentration in a given sample. LFAs have a wide range of applications, but here they will be used to measure the concentration of THC compounds. This could vastly improve the speed and reliability of cannabis testing compared with current lab tests, for instance by enabling testing at a roadside traffic stop or self-testing.

The overall design (above) has four PCB modules that fit snugly together and were designed and ordered during the summer. It includes two high-power LEDs positioned against the LFA sample, two thermal sensors, a microcontroller and switch to start the test and process the results, and a battery management board to power the device.
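Quantifying a concentration from a sensor reading usually relies on a calibration curve: readings from standards of known concentration are fitted, and the fit is then inverted for an unknown sample. The sketch below assumes a linear sensor response over the working range and uses made-up calibration numbers; the device’s actual signal, units and calibration model are not specified here, and on the device itself this logic would run on the Arduino rather than in Python.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two calibration points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("calibration concentrations must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x

def estimate_concentration(signal, slope, intercept):
    """Invert the calibration line to turn a sensor reading into a concentration."""
    return (signal - intercept) / slope

# Hypothetical calibration standards: concentrations (arbitrary units) and sensor signals.
standards = [0.0, 5.0, 10.0, 20.0]
signals = [0.10, 0.60, 1.10, 2.10]
slope, intercept = fit_line(standards, signals)
print(round(estimate_concentration(1.6, slope, intercept), 2))  # prints 15.0
```

Real LFA responses often saturate at high concentrations, so a nonlinear (e.g. sigmoidal) calibration model may be needed outside the linear range.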

Streets and intersections around you are first built in traffic simulation software (e.g., Vissim) before actual construction, enabling engineers to conduct traffic and travel-time analysis. Traffic simulation software uses mathematical equations to simulate human behavior, which may not always reflect real-world behavior accurately. For example, it may not capture the subtle variations in a driver’s speed or a sudden change of direction to avoid a collision. It also cannot capture weather conditions or nighttime/daytime conditions. Now, imagine if you could drive inside a traffic simulation. We connected traffic simulation software to a game engine so that a person wearing a virtual reality headset can virtually drive inside the simulation. This approach captures human behavior, weather, and nighttime/daytime conditions.
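As an example of the kind of equation such software uses to mimic drivers, the sketch below implements the Intelligent Driver Model (IDM), a widely used car-following model. It is a stand-in for illustration only: Vissim’s own driver-behavior models are different (they are based on Wiedemann’s car-following models), and the parameter values below are typical textbook choices, not calibrated ones.

```python
import math

def idm_acceleration(v, gap, dv, v0=30.0, T=1.5, a=1.0, b=2.0, s0=2.0, delta=4):
    """Intelligent Driver Model acceleration in m/s^2.

    v   -- follower speed (m/s)
    gap -- bumper-to-bumper distance to the leader (m), must be > 0
    dv  -- approach rate v - v_leader (m/s); positive when closing in
    """
    desired_gap = s0 + max(0.0, v * T + v * dv / (2.0 * math.sqrt(a * b)))
    return a * (1.0 - (v / v0) ** delta - (desired_gap / gap) ** 2)

def simulate_follower(leader_speed, steps=3000, dt=0.1, v=0.0, gap=50.0):
    """Euler-integrate one follower behind a leader driving at constant speed."""
    for _ in range(steps):
        acc = idm_acceleration(v, gap, v - leader_speed)
        v = max(0.0, v + acc * dt)          # vehicles do not reverse
        gap += (leader_speed - v) * dt      # gap shrinks when the follower is faster
    return v, gap

v, gap = simulate_follower(leader_speed=15.0)
print(f"follower speed {v:.1f} m/s, gap {gap:.1f} m")
```

The follower settles at the leader’s speed with a fixed equilibrium gap and reacts identically every run, which is exactly the kind of idealized behavior that a human driving in VR can reveal to be unrealistic.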

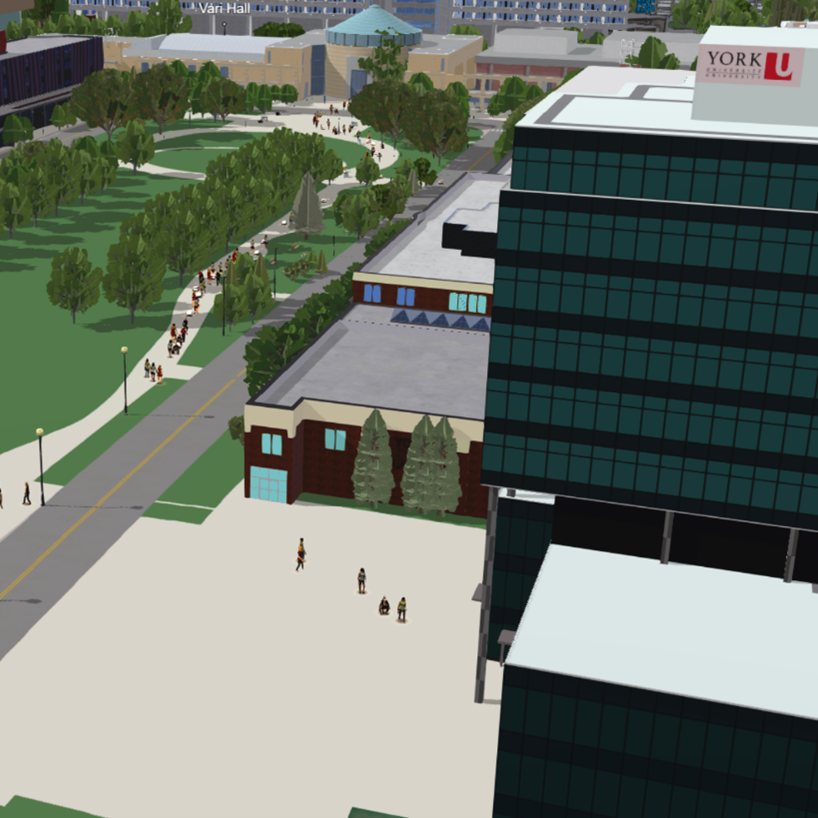

In the image, you’ll see a vivid, 3D-rendered sidewalk bustling with life, akin to a typical scene at York University. The unique aspect here is the inclusion of small, autonomous delivery robots navigating amidst human pedestrians and wheelchair users. It captures the moment just as a robot calculates a path around a group of chatting students, highlighting the dance of shared public space.

My research focuses on ensuring that as robots become more common in our urban areas, they do so in a way that is safe and harmonious for everyone involved. We built a digital twin of a York University environment and simulated various interactions between robots and humans. This image serves as a snapshot of that simulation, allowing us to observe and analyze real-world dynamics in a controlled setting.
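The moment in the image, a robot computing a path around a group of students, can be illustrated with a textbook grid planner. The sketch below uses A* search on a 4-connected occupancy grid in which cells marked 1 stand in for pedestrians; it is a generic example under that simplifying assumption, not the planner used in the York University digital twin.

```python
import heapq

def astar(grid, start, goal):
    """Shortest 4-connected path on a grid of 0 (free) / 1 (blocked) cells, or None."""
    rows, cols = len(grid), len(grid[0])

    def h(cell):  # Manhattan distance: admissible for unit-cost 4-connected moves
        return abs(cell[0] - goal[0]) + abs(cell[1] - goal[1])

    open_heap = [(h(start), 0, start)]
    came_from = {start: None}
    best_cost = {start: 0}
    while open_heap:
        _, cost, cell = heapq.heappop(open_heap)
        if cell == goal:
            path = []
            while cell is not None:       # walk back from goal to start
                path.append(cell)
                cell = came_from[cell]
            return path[::-1]
        if cost > best_cost[cell]:
            continue  # stale heap entry superseded by a cheaper route
        r, c = cell
        for nxt in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
            nr, nc = nxt
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                new_cost = cost + 1
                if new_cost < best_cost.get(nxt, float("inf")):
                    best_cost[nxt] = new_cost
                    came_from[nxt] = cell
                    heapq.heappush(open_heap, (new_cost + h(nxt), new_cost, nxt))
    return None

# 1 = a group of pedestrians blocking the middle of the sidewalk.
sidewalk = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
print(astar(sidewalk, (1, 0), (1, 4)))
```

A static grid ignores that pedestrians move and that socially acceptable paths keep a comfortable distance from people, which is precisely what simulating robot-human interactions in a digital twin helps study.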

This computer-generated image shows a very colorful environment. This research aimed at the subjective quality assessment of two visually lossless codecs (DSC and VDC-M) for high dynamic range images in 2D and stereoscopic 3D (S3D) viewing conditions. A set of images was generated to span a range of content, including monocular zones, overlays, and finely textured surfaces, to challenge the codecs. This image was used in the study and was rendered to include specific features, such as fine textures, foliage, and bright colors, that can challenge the codecs’ performance. S3D content such as this image is used mostly in augmented reality (AR) and virtual reality (VR) applications. This research protocol paves the way for developing AR/VR image quality assessment protocols, and it is important for understanding the effect of compression on the AR/VR user experience and for codec development.
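Subjective quality studies typically summarize each test condition by a mean opinion score (MOS) with a confidence interval across observers. The sketch below assumes ratings on a numeric scale and uses a normal-approximation 95% interval (1.96 standard errors); the study’s actual rating scale, number of observers and statistical procedure are not stated here, and for small panels a Student’s t quantile would be more appropriate than 1.96.

```python
import math
import statistics

def mos_with_ci(ratings, z=1.96):
    """Mean opinion score and half-width of a normal-approximation confidence interval."""
    if len(ratings) < 2:
        raise ValueError("need at least two ratings")
    mean = statistics.fmean(ratings)
    sem = statistics.stdev(ratings) / math.sqrt(len(ratings))  # standard error of the mean
    return mean, z * sem

# Hypothetical ratings from eight observers for one image under one codec/viewing condition.
scores = [4, 5, 4, 3, 5, 4, 4, 5]
mean, half_width = mos_with_ci(scores)
print(f"MOS = {mean:.2f} ± {half_width:.2f}")
```

Comparing the intervals for the uncompressed reference and each codec, in 2D and in S3D, shows whether any perceived difference is larger than the spread between observers.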

This picture shows the pipes leading to a Tap House in the remote settlement of Itilleq, Greenland. Itilleq has a dwindling population of 91 people in 20-30 households. Residents collect potable water at these Tap Houses for use at home; only 3 buildings in the community have a piped water distribution system. We took a one-hour boat ride to reach Itilleq, and it took some effort to locate all the Tap Houses by following the pipes from the treatment plant. Even the residents didn’t know for sure how many Tap Houses there were in the settlement. Many residents would like to improve the settlement’s water and wastewater infrastructure to encourage younger people to return home and to impress cruise ship tourists. We are researching improvements to their water source, water distribution network, household hygiene, and wastewater system.

We captured a multi-exposure fusion photo of a rustic clock and a boat set against the Toronto skyline at night from the Toronto Islands. Cameras are typically limited in the range of brightness they can capture. At night, longer exposures contain detail in the city skyline, while shorter exposures capture detail in closer objects like the clock. However, no single image captures the whole scene in good detail. Thus, we capture a stack of images at different exposure times (brightnesses) and then fuse them, keeping the good parts of each image. Smartphone cameras use similar procedures in certain scenarios to produce what are known as HDR (high dynamic range) images. Our research group is interested in improving HDR images and extending these methods to video.
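The fusion step described above can be sketched with a simplified, single-scale version of Mertens-style exposure fusion: each pixel of each exposure is weighted by how well exposed it is (how close it is to mid-grey), and the stack is blended with the normalized weights. This is an illustrative assumption, not the group’s method; full exposure fusion also uses contrast and saturation weights and blends in a Laplacian pyramid to avoid visible seams.

```python
import numpy as np

def fuse_exposures(stack, sigma=0.2, eps=1e-12):
    """Blend grayscale exposures (values in [0, 1]) by per-pixel well-exposedness."""
    stack = np.asarray(stack, dtype=float)               # shape: (n_exposures, H, W)
    weights = np.exp(-((stack - 0.5) ** 2) / (2 * sigma ** 2))
    weights /= weights.sum(axis=0, keepdims=True) + eps  # normalize across exposures
    return (weights * stack).sum(axis=0)

# Toy 1x2 scene: left pixel is the dim night skyline, right pixel the brighter nearby clock.
short = np.array([[0.05, 0.50]])  # short exposure: skyline too dark, clock well exposed
long_ = np.array([[0.50, 1.00]])  # long exposure: skyline well exposed, clock clipped
print(fuse_exposures([short, long_]).round(2))
```

Both fused pixels end up close to mid-grey because each one is drawn mostly from the exposure in which it was well exposed.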

In this scene, we capture a bunch of multi-colored felt spheres (Loraxes) in a light box. Scenes like this allow us to create algorithms that more accurately reconstruct color for both scientific and aesthetic purposes. A current limitation of camera design is that fine color information can be lost or distorted. We purposefully create scenes that force a camera to fail, and then work to create algorithms that restore color faithfully. Additionally, we pride ourselves on creating data with high visual impact; beautiful images draw attention to strong research. Creating this type of data has been as much an artistic endeavor as a scientific one; I have captured scenes with many mediums, like yarn, felt, beads, and even LEGO.

My name is ******** and I’m from the outskirts of Colorado Springs, USA. Where I’m from is where the horses ride and the deer prance. Even as I read these words, I pronounce them in a midwestern accent. Toronto is a world larger than comprehension for someone from my background. I am currently doing research in autoexposure for computational photography and have recently captured a dataset and algorithm suite that I am excited to present in Paris at the International Conference on Computer Vision (ICCV) 2023. Our dataset allows us to simulate exposures for complex lighting conditions in video. Part of my exploration is understanding how cameras work in dynamic lighting conditions and capturing data with the camera slung around my shoulders. This was a night where I got to enjoy research but also appreciate the beauty of a city I have started to call my own.
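As a rough illustration of what an autoexposure algorithm does, the sketch below implements a simple feedback loop that scales the exposure time until the mean brightness of a frame approaches a mid-grey target. It assumes a hypothetical linear sensor that clips at 1.0, a static scene, and a target of 0.18 in linear units (all assumptions); it is not the algorithm suite mentioned above, which addresses dynamic lighting in video.

```python
import numpy as np

def capture(scene_radiance, exposure):
    """Hypothetical linear sensor: pixel value = radiance * exposure, clipped to [0, 1]."""
    return np.clip(scene_radiance * exposure, 0.0, 1.0)

def auto_expose(scene_radiance, exposure=1.0, target=0.18, gain=0.5, iterations=20,
                min_exp=1e-4, max_exp=10.0):
    """Multiplicative feedback loop that nudges exposure toward target / mean brightness."""
    for _ in range(iterations):
        mean = float(capture(scene_radiance, exposure).mean())
        ratio = target / max(mean, 1e-6)
        # Damped update in the log domain (gain < 1) to avoid oscillating between frames.
        exposure = float(np.clip(exposure * ratio ** gain, min_exp, max_exp))
    return exposure

rng = np.random.default_rng(0)
scene = rng.uniform(0.0, 0.2, size=(64, 64))  # a dim, night-like scene
exp_time = auto_expose(scene)
print(round(float(capture(scene, exp_time).mean()), 3))
```

With a gain of 0.5, the log-exposure error halves every frame; in video, a smaller gain trades slower convergence for fewer visible brightness jumps when the lighting changes.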